Why No One's Using Your AI Agent (and How Better UX Can Fix It)

Generative AI brings with it a new paradigm for designing software. Are you doing what's best for your users' experience?

If you’re new to this blog, welcome! I’m Snigdha, an AI developer who has built and led AI products at startups (from early stage to nearing IPO) and at enterprises.

On this blog, I'll be sharing insights on AI development, product leadership, and innovation, most of them drawn from AI projects and client consultations at MindChords.AI.

Read more about why I started this blog: the inspiration and mission behind NextWorldTrio.

Designing user experiences for generative AI products is nothing like what we've done before. As someone who's spent years developing custom AI solutions for businesses across industries, I've had a front-row seat to this transformation—and the challenges that come with it.

Gone are the days when software would reliably produce the same output given the same input. Welcome to the brave new world of non-deterministic AI, where each interaction might yield something different. Exciting? Absolutely. Challenging to design for? You bet.

Big thanks to Manvi Jaju for her valuable input on this piece!

Manvi is a human-centered designer and a senior at Srishti Manipal Institute of Art, Design and Technology, with a deep interest in conversational AI and UX. She’s also building multiple entrepreneurial ventures, including one that empowers users to learn local Indian languages through voice-first technology, helping break everyday language barriers.

In this article, you’ll find:

Real-world challenges of designing UX for Generative AI products

Actionable steps to improve GenAI UX across your product touchpoints

A look at what it really takes to build human-centered AI experiences

Why do GenAI and AI agents need special treatment when it comes to UX?

What exactly defined traditional software products? You clicked a button, and you knew exactly what would happen. A form would be submitted, an email would be sent, a few items would be added to your shopping cart. That predictability created a sense of control and reliability that users came to expect. But generative AI has flipped this paradigm on its head.

Image Source: OpenAI GPT-4o image generation

"I asked the customer support bot something and got two different answers. Which one is correct?"

“The AI assistant gave me step-by-step instructions yesterday, but today it says it can't help with that task. What changed?”

"Sometimes your AI writes amazing content for me, and sometimes it's mediocre. How can I make sure it's always good?"

"Your AI tool analyzed my data differently when I uploaded it a second time. How do I know which analysis to trust?"

"Yesterday the AI said my business strategy was sound, today it's identifying major flaws. Which feedback should I believe?"

These are the questions we get in user feedback reviews, especially from users new to AI interfaces. And each one is a fair question! When ChatGPT gives you a brilliant response one minute and a mediocre one the next—with the exact same prompt—it feels, well, confusing.

Major LLM chat providers, including chatgpt.com, gemini.google.com, and claude.ai, have addressed this with the simplest solution: a "regenerate" button. But is that really enough for your niche agentic product?

We have implemented some innovative approaches for our clients to solve this very problem. And here's a hint: the answer wasn't choosing better or bigger models, picking the most popular agentic library, or adopting MCP or A2A.

It was designing a better UX.

The key insight: non-determinism isn't a bug—it's a feature. But we need to design interfaces that help users harness this variability rather than being confused by it.

To get a full whitepaper on techniques to tackle these UX challenges, along with real-life examples of how we tackled them for our clients, comment on this post or contact us at hello@mindchords.ai and tell us what you’re building.

Nailing Product Messaging: Communicating AI's Inner Workings Without the Jargon

"Just make the AI write better personalised emails."

If I had a dollar for every time I've heard a request like this, I could retire early (not that that’d ever be my goal ;) ). The challenge? Users often view AI as either magical (it can do anything!) or binary (it either works perfectly or it's useless).

The reality, as we know, lives in that messy middle ground. AI has specific capabilities, limitations, and varying degrees of confidence in its outputs. But how do we communicate this without turning product interfaces into AI textbooks?

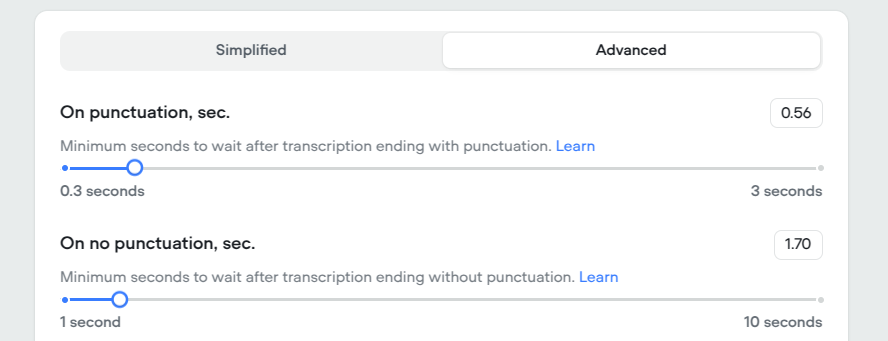

Here's what doesn't work: dumping technical jargon on users. Nobody wants to adjust "temperature parameters" or "max tokens" if they don't understand what those things are. Even users who feel "powerful" because they can tweak these settings rarely understand which values suit their use case or how one setting interacts with the others.

Instead, we need to translate complex AI concepts into human terms:

What Works Better:

Confidence indicators: Visual cues that show when the AI is certain versus when it's speculating. That said, producing accurate confidence scores is a long discussion in itself.

Plain-language explanations: "This answer is based on data up to 2023" instead of "data cutoff", or a "creativity" setting instead of "temperature".

Progressive disclosure: Offering basic natural-language controls by default, with optional advanced settings for curious users.
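To make the "creativity" and confidence-indicator ideas concrete, here is a minimal Python sketch of a settings layer that shows plain-language choices by default and exposes raw parameters only on request. Everything in it is a hypothetical illustration: the preset names, the temperature values, the 512-token default, and the confidence thresholds are assumptions, and the confidence score is assumed to come from some upstream process that is not shown.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from a plain-language "creativity" choice to a sampling
# temperature. The numbers are illustrative assumptions, not tuned values.
CREATIVITY_PRESETS = {
    "precise": 0.2,
    "balanced": 0.7,
    "creative": 1.0,
}


@dataclass
class GenerationSettings:
    temperature: float
    max_tokens: int


def resolve_settings(creativity: str = "balanced",
                     advanced_temperature: Optional[float] = None,
                     advanced_max_tokens: Optional[int] = None) -> GenerationSettings:
    """Progressive disclosure: most users pick a named preset; users who open
    the (hypothetical) advanced panel may override the raw parameters."""
    if creativity not in CREATIVITY_PRESETS:
        raise ValueError(f"Unknown creativity level: {creativity!r}")
    temperature = CREATIVITY_PRESETS[creativity]
    if advanced_temperature is not None:
        temperature = advanced_temperature
    max_tokens = advanced_max_tokens if advanced_max_tokens is not None else 512
    return GenerationSettings(temperature=temperature, max_tokens=max_tokens)


def confidence_label(score: float) -> str:
    """Translate a 0-1 confidence score into user-facing wording.
    The thresholds are placeholder assumptions."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.8:
        return "High confidence"
    if score >= 0.5:
        return "Moderate confidence: worth a quick check"
    return "Low confidence: please verify before relying on this"


print(resolve_settings("precise"))
print(resolve_settings("creative", advanced_temperature=0.9))
print(confidence_label(0.62))
```

Keeping the mapping in one place means the basic and advanced views can never disagree about what "balanced" actually sends to the model.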

For example, what I liked about this voice agent platform is that it offers two versions of its settings, one for lay users and one for advanced users:

The insight here is clear: transparent design builds trust. When users understand what the AI is doing—even at a high level—they're more forgiving of limitations and more strategic in how they use the tool.

How long will a human wait for an AI to respond?

"I bought this AI tool to save time, but now I'm just staring at loading spinners all day."

This sentiment captures one of the biggest paradoxes in AI user experience: tools meant to automate work often create new forms of waiting and monitoring.

The "human-in-the-loop" paradigm is crucial for many AI applications—having people review, correct, or approve AI outputs before they go live. But poorly designed oversight mechanisms can make users feel like glorified babysitters rather than empowered professionals.

The solution? A complete redesign of the workflow:

Batch processing with notifications: Rather than making users watch the process, we let them initiate it and then notify them when human input is required.

Estimated completion times: Providing realistic timelines for AI processes so users can plan accordingly.

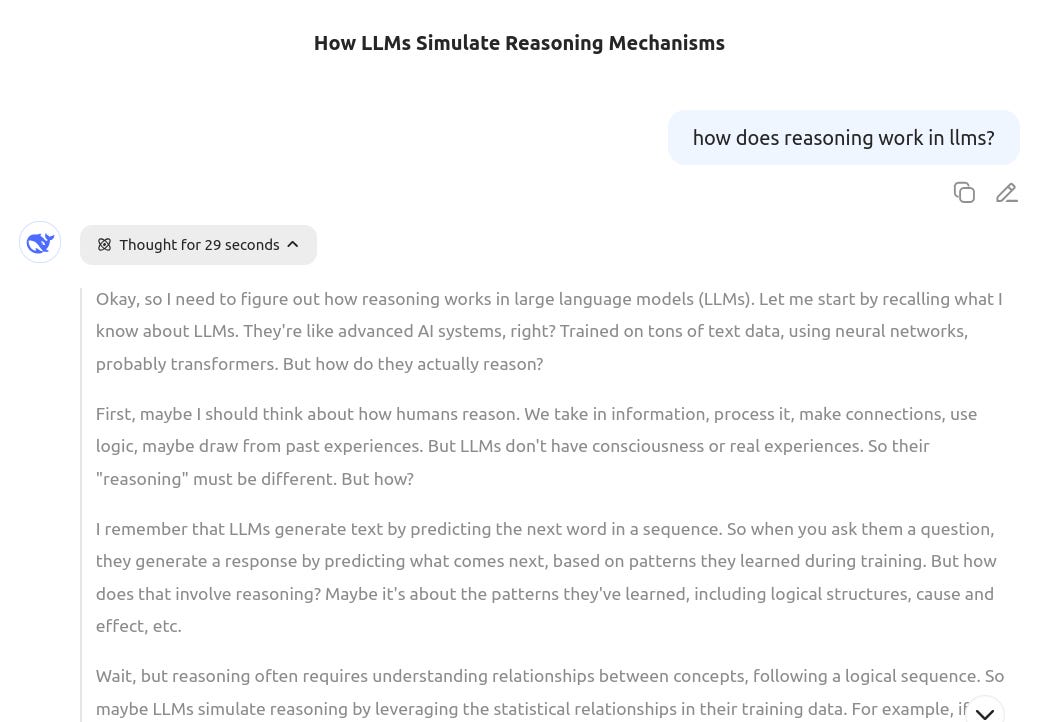

Revealing intermediate results: Showing intermediate results even as the system continues processing the full dataset. In fact, in my discussion with Manvi, she described how DeepSeek’s UI and interaction design made the model’s thinking process visible, mirroring how humans think out loud. That transparency was exactly what users appreciated. These intermediate outputs not only help users critically evaluate the final results but also offer clues for verifying areas where the model may be uncertain.
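Here is a rough sketch of how batch processing, time estimates, and intermediate results can fit together, written as a Python generator. It assumes a hypothetical `analyze` callable standing in for the model call, a `needs_review` check that decides when a human is required, and a `notify` callback standing in for email or push notifications. The ETA is a naive running average, not a production estimator.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterator, List


@dataclass
class ProgressUpdate:
    done: int
    total: int
    eta_seconds: float          # naive estimate of remaining time
    partial_results: List[str]  # results so far, which the UI can show early


def process_batch(
    items: List[str],
    analyze: Callable[[str], str],        # hypothetical stand-in for the AI call
    needs_review: Callable[[str], bool],  # decides when a human must step in
    notify: Callable[[str], None],        # stand-in for email/push notifications
) -> Iterator[ProgressUpdate]:
    """Process items one by one, yielding progress updates for the UI."""
    results: List[str] = []
    start = time.monotonic()
    total = len(items)
    for i, item in enumerate(items, start=1):
        result = analyze(item)
        results.append(result)
        # Ping the user only when their input is actually needed.
        if needs_review(result):
            notify(f"Item {i} of {total} needs your review: {result}")
        # Running average of time per item, projected over the items left.
        avg_per_item = (time.monotonic() - start) / i
        eta = avg_per_item * (total - i)
        yield ProgressUpdate(i, total, eta, list(results))


def fake_analyze(doc: str) -> str:
    # Placeholder for a slow model call.
    return f"{doc}: {'ambiguous clause' if 'contract' in doc else 'ok'}"


inbox: List[str] = []
docs = ["invoice_01.txt", "contract_draft.txt", "invoice_02.txt"]
for update in process_batch(docs, fake_analyze, lambda r: "ambiguous" in r, inbox.append):
    print(f"{update.done}/{update.total} done, about {update.eta_seconds:.1f}s left")
    print("  latest:", update.partial_results[-1])
print("Notifications:", inbox)
```

In a real product this loop would run in a background job rather than in the user's session, so the user can leave and come back when notified.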

When we apply these solutions to AI products, the result is often transformative. Instead of feeling chained to the AI, users reported feeling like it was working for them. Productivity increased not just because the AI was analyzing documents, but because the human-in-the-loop elements were thoughtfully designed.

This points to a core UX best practice: respect users' time and attention. If your interface makes people feel like they're serving the AI rather than the other way around, you've missed the mark.

Trust But Verify: Countering Blind Faith in AI Outputs

"The AI said we should increase the tariffs, so he did it." - Iykyk 😀

Jokes apart, this kind of statement makes me wince. While AI can provide valuable insights and food for thought for your research, the growing tendency for users to treat AI outputs as gospel truth is concerning, especially when those outputs can be wrong, outdated, or based on biased data.

We're seeing a troubling pattern: as AI interfaces become more polished and human-like, users' critical evaluation of the content decreases. The more confidently an AI presents information, the less likely users are to question it.

This creates a perfect storm for misinformation and wrong decisions. An AI assistant making up facts with absolute certainty is far more dangerous than one that's visibly struggling.

Like any powerful tool, AI takes skill to use effectively. In AI's case, that skill is a critical mindset and the ability to evaluate its outputs. Failing that, consult developers who understand AI’s limitations.

So how do we design for appropriate trust? Here are some approaches that work:

Source citations and references: Showing where information comes from helps users gauge reliability. Perplexity.ai has already pushed this trend in the right direction.

Verification prompts: Explicitly encouraging users to fact-check important information.
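A minimal Python sketch of rendering an answer with numbered citations and a verification prompt. The `Answer` and `Source` types, the `high_stakes` flag, and the prompt wording are all hypothetical; deciding which topics count as high-stakes is assumed to happen elsewhere in the product.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Source:
    title: str
    url: str


@dataclass
class Answer:
    text: str
    sources: List[Source] = field(default_factory=list)
    high_stakes: bool = False  # hypothetical flag, e.g. for legal or financial topics


def render_answer(answer: Answer) -> str:
    """Format an AI answer with numbered citations and, when warranted,
    an explicit nudge to verify before acting."""
    lines = [answer.text, ""]
    if answer.sources:
        lines.append("Sources:")
        for i, src in enumerate(answer.sources, start=1):
            lines.append(f"[{i}] {src.title} ({src.url})")
    else:
        # Be upfront when nothing backs the answer.
        lines.append("No sources found for this answer. Treat it as unverified.")
    if answer.high_stakes:
        lines.append("")
        lines.append("This may inform an important decision. Please double-check "
                     "the key facts before acting on them.")
    return "\n".join(lines)


print(render_answer(Answer(
    text="Raising tariffs tends to increase prices of imported goods.",
    sources=[Source("Example trade report", "https://example.com/trade-report")],
    high_stakes=True,
)))
```

Flagging unsourced answers explicitly, rather than silently omitting a sources section, is what nudges users toward the calibrated trust described above.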

The lesson? Design that encourages healthy skepticism creates better outcomes. Instead of maximizing blind trust in AI, aim for appropriate trust calibrated to the AI's actual capabilities.

Practical Steps for Better GenAI UX Design

So where do we go from here? If you're building or implementing AI systems for your organization, here are concrete steps to improve the user experience:

1. Audit Your Current AI Touchpoints

Where are users interacting with AI outputs in your product or service?

What expectations have you set about these interactions?

Where are users expressing confusion, frustration, or blind trust?

2. Implement Transparency Without Overwhelm

Add simple explanations of AI limitations in context

Provide confidence indicators for AI outputs

Develop consistent visual language for AI-generated vs. human-verified content

3. Rethink Human-in-the-Loop Workflows

Map the current time users spend waiting or monitoring AI processes

Identify opportunities for asynchronous review rather than real-time babysitting
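For the mapping step, one possible approach is to compute wait times from product analytics events. This Python sketch assumes a hypothetical event log of `(task_id, event_name, timestamp)` tuples with made-up `ai_started` and `ai_finished` event names, and an arbitrary 60-second threshold for flagging tasks that might be better handled asynchronously.

```python
from datetime import datetime
from statistics import median
from typing import Dict, List, Tuple

# (task_id, event_name, ISO-8601 timestamp); event names are hypothetical.
Event = Tuple[str, str, str]


def wait_times_seconds(events: List[Event]) -> Dict[str, float]:
    """Seconds between an AI task starting and its result being ready."""
    started: Dict[str, datetime] = {}
    waits: Dict[str, float] = {}
    for task_id, name, ts in events:
        t = datetime.fromisoformat(ts)
        if name == "ai_started":
            started[task_id] = t
        elif name == "ai_finished" and task_id in started:
            waits[task_id] = (t - started[task_id]).total_seconds()
    return waits


def summarize(waits: Dict[str, float], async_threshold_s: float = 60.0) -> dict:
    """Aggregate waiting time and flag tasks long enough to justify a
    'notify me when done' flow instead of real-time monitoring."""
    values = list(waits.values())
    return {
        "tasks": len(values),
        "median_wait_s": median(values) if values else 0.0,
        "total_wait_min": sum(values) / 60,
        "async_candidates": sorted(t for t, w in waits.items() if w > async_threshold_s),
    }


log = [
    ("t1", "ai_started", "2024-05-01T10:00:00"),
    ("t1", "ai_finished", "2024-05-01T10:00:30"),
    ("t2", "ai_started", "2024-05-01T10:01:00"),
    ("t2", "ai_finished", "2024-05-01T10:04:00"),
]
print(summarize(wait_times_seconds(log)))
```

The threshold should come from your own user research rather than the placeholder used here.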

4. Build Trust Verification Mechanisms

Add source citations where appropriate

Explicitly prompt users to verify outputs where needed

The Future of GenAI UX Design

As generative AI continues to evolve, so too will our approaches to designing for it. We're already seeing promising developments.

But regardless of technological advances, the core principle remains: effective AI design puts human needs, limitations, and psychology at the center. The best AI experiences don't just showcase impressive technology—they solve real human problems in ways that feel natural, trustworthy, and empowering.

With that in mind, Manvi also pointed out an inevitable shift in how teams, especially developers and UX designers, collaborate. It won’t be long before developers and UX designers work together to design the prompts and guardrails that power GenAI applications, so that those applications solve problems in ways humans can actually benefit from.

The Human Touch in Everything AI

The rush to implement AI capabilities has often left thoughtful UX design as an afterthought. Companies eager to add AI to their products have focused on the technology first and the human experience second. This approach inevitably leads to disappointed users, wasted potential, and sometimes even harmful outcomes.

What's needed is a fundamental shift in how we approach AI implementation—starting not with what the technology can do, but with how humans can best work with it.

This means:

Designing for appropriate trust rather than maximum trust

Creating interfaces that educate without overwhelming

Building workflows that respect human time and attention

Developing systems that augment rather than replace human intelligence

Understanding the emotional and psychological dimensions of human-AI interaction

At MindChords.AI, we've seen firsthand the difference this human-centered approach makes. Clients who prioritize thoughtful AI UX design see higher adoption rates, better outcomes, and more satisfied users than those who simply drop AI capabilities into existing products.

The future belongs not just to organizations that adopt AI, but to those that design AI experiences with genuine empathy and understanding for the humans who will use them.

Because ultimately, the point isn't to create AI that feels human—it's to create AI that helps humans be more effective, creative, and fulfilled. And that requires design thinking that puts people first.

Looking for expert guidance on designing AI experiences that your users will actually love? Our team specializes in creating custom AI solutions with human-centered design at their core. Contact us at hello@mindchords.ai to discuss how we can help transform your AI implementation from a technical showcase into a truly valuable user experience.
