Models, Models Everywhere: How to Choose the One for You

The Five Critical Factors for Choosing an LLM for Your Use Case (And Which Models Win)

If choosing AI models to work with has started to feel like choosing between 47 different types of toothpaste - you’re not alone.

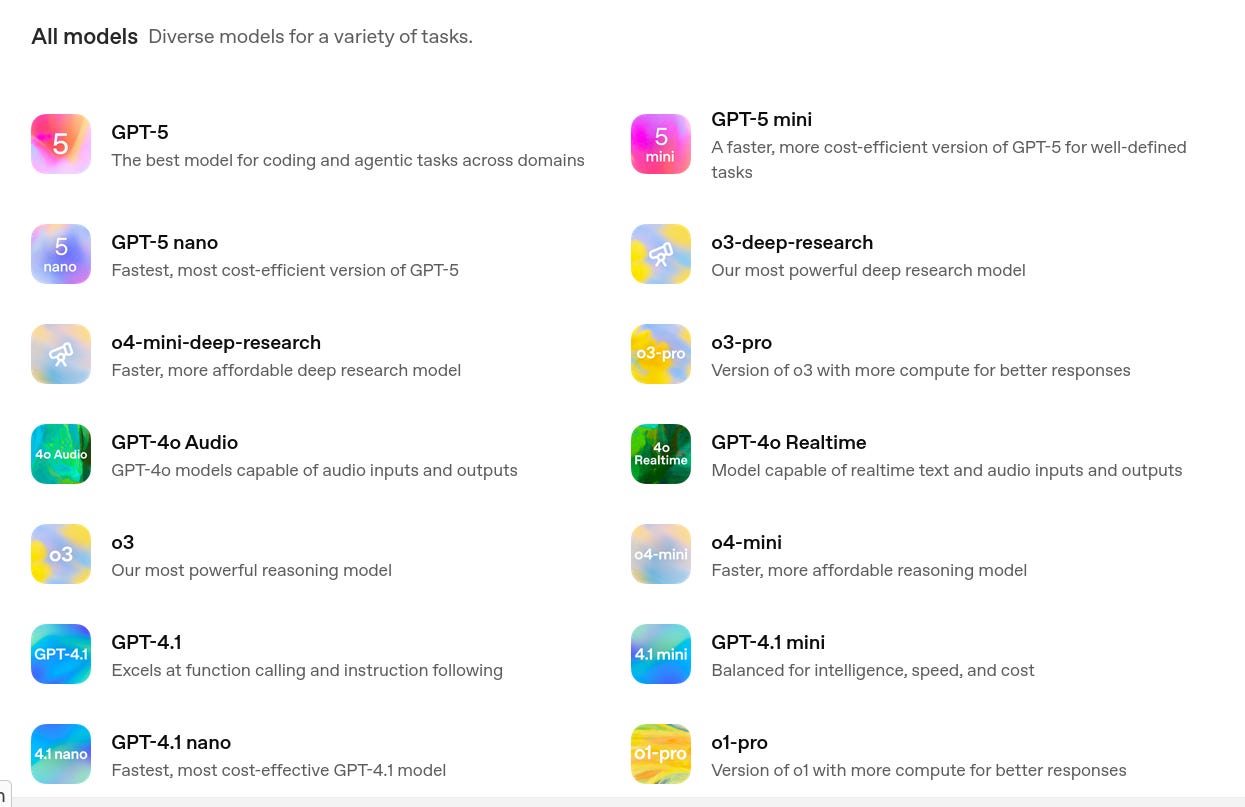

If you’ve recently started building with generative AI, you’ve probably experienced that overwhelming moment when faced with the dizzying array of available models. The GPT family (GPT-3.5, GPT-4o, o3, o3-deep-research, GPT-5), Claude (Sonnet 3.5, 4 and 4.5, Haiku, Opus), Gemini (2.5 Pro, Flash, Flash-Lite), Llama (3.1, 4, Scout, Maverick), Mistral (Small 3, Small 3.1, Large 2, Medium 3, Edge) ... the list seems to grow longer every week.

Here’s the reality: choosing the right AI model is not rocket science, but it does require understanding what truly matters for your use case. Nor do you have to choose a model once and stay stuck with it. In fact, the best execution pipelines let you switch to newer models as they are released. Still, it is more practical to test 5 models for your use case than 58. So let’s cut through the marketing noise and get practical about which models actually work best for different scenarios.

1. Cost: From Budget-Friendly to Premium Performance

Ultra-Budget Champions ($0.04-$0.1 per million tokens):

Ministral 3B, Ministral 8B and Mistral Small 3.1 (from Mistral) - Cheap models are available across modalities: voice, reasoning, coding, you name it.

GPT-5 nano, GPT-4.1 nano and gpt-oss-20b (from OpenAI) - gpt-oss-20b is open source, so it is available through other managed services as well.

Gemini 2.0 Flash-Lite and 2.5 Flash-Lite (from Google) - Some of the cheapest models available.

DeepSeek R1 distilled models - The cheapest open source reasoning models out there, especially if you consider batch pricing.

Best for: High-volume simple tasks, repeatable tasks that are easy to fine-tune an LLM on, edge device use cases (open source Mistral models)

Mid-Range Value ($0.1-$1.00 per million tokens):

Gemini 2.5 Flash - Hybrid reasoning model with good multi-modal capabilities

Claude 3.5 and 3 Haiku - Fast responses, good enough reasoning for most tasks

GPT-4o mini / GPT-4.1 mini / GPT-5 mini - Solid performance across most tasks

Best for: Most business applications, content generation, conversational AI

Premium Performance ($1-$5 per million tokens):

GPT-4o/4.1 - Good for complex tasks

Claude Sonnet 4 and Sonnet 4.5 - Excellent for coding, analysis and high-quality conversational AI

Gemini 2.5 Pro - Strong multi-modal capabilities and long context

Best for: Complex reasoning, high-stakes content, specialized tasks, coding with human in the loop

AI-Assisted Knowledge Work or Complex Problem Solving ($5+ per million tokens):

Best for: Low volume, high complexity tasks with human in the loop for knowledge work and coding

OpenAI’s o1-pro

I guess it’s also worth mentioning OpenAI’s o1-pro ($150 per 1M input tokens and $600 per 1M output tokens) - which deserves a category of its own - and I have no idea what that category should be called.

This is not designed to be used for repeatable tasks, but rather as a potential “replacement” or “enhancement” that your knowledge workers can put to use for faster research, complex analysis, software development etc.

Whether it adds value proportionate to its cost is debatable. So try it at your own risk (and budget appetite) before this model gets deprecated in favor of more practical ones.
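To make these price tiers concrete, it helps to turn "per million tokens" into a number for your own workload. Here is a minimal sketch of that arithmetic. The `Pricing` class, the `estimate_cost` helper and the workload numbers are illustrative assumptions; only the o1-pro prices come from the figures quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price in USD per 1M tokens. Values are typed in by you, not fetched."""
    input_per_million: float
    output_per_million: float


def estimate_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost for the given token volumes."""
    return (
        input_tokens / 1_000_000 * pricing.input_per_million
        + output_tokens / 1_000_000 * pricing.output_per_million
    )


# o1-pro prices as quoted above: $150 per 1M input and $600 per 1M output tokens.
O1_PRO = Pricing(input_per_million=150.0, output_per_million=600.0)

# Hypothetical workload: 1,000 requests, each ~2,000 input and ~500 output tokens.
requests = 1_000
workload_cost = estimate_cost(O1_PRO, requests * 2_000, requests * 500)
print(f"Estimated cost: ${workload_cost:,.2f}")  # prints "Estimated cost: $600.00"
```

Swap in the current list prices for any model you are considering and rerun it with your real request volumes. Prices change often, so treat the output as a rough estimate.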

2. Privacy & Security: Who Can You Actually Trust?

Maximum Privacy (Enterprise-Grade):

For Building GenAI Applications:

Google Vertex AI - a single, fully-managed, unified development platform for using Gemini models and other third party and open source models at scale. Google is responsible for providing secure infrastructure for its services, including physical security of data centers, network security, and application security. This includes compliance with applicable industry standards and regulations.

AWS Bedrock - Foundation models and fine-tuning tools, with industry-leading security, privacy, and compliance for generative AI applications. Bedrock is in scope for common compliance standards including ISO, SOC, CSA STAR Level 2, GDPR, FedRAMP High, and is HIPAA eligible.

Microsoft Azure - For OpenAI models, it offers flexible deployment options (Standard, Provisioned, and Batch) so you can tailor your plan to your business needs, from small-scale experiments to large, high-performance workloads. Other foundation models are available as well, with 100+ compliance certifications when you need them.

Anthropic’s API - This lets you get started in no time and covers the most common compliance requirements, including SOC 2 Type 2 and HIPAA. The compliance list can always be verified here.

OpenAI’s API - No training on your data, zero data retention on request, Business Associate Agreements (BAA) for HIPAA compliance, SOC 2 Type 2 compliance.

For AI Assistants:

Gemini Assistant for Google Cloud and Google Workspace - For advanced AI coding and enhanced productivity in Google Suite - Docs, Sheets, Mail, Drive etc. Your organization’s data is protected. It is not used to train the general Gemini models. Content and prompts stay within the organization and are not shared with other customers.

Claude for Enterprise - No training on your data, enterprise-grade security features—like SSO, role-based permissions, and admin tooling—that help protect your data and team.

ChatGPT for Enterprise - OpenAI’s data protection practices support your compliance with GDPR, CCPA, and other privacy laws, and align with CSA STAR, SOC 2 Type 2 Trust Services Criteria, and ISO/IEC 27001, 27017, 27018, 27701 certifications.

IBM Watson and Microsoft Azure AI also stand out for enterprise-grade security and regulatory compliance.

Reality Check: 60% of the knowledge workforce limits their AI use because they’re worried about data privacy and security - but most concerns can be addressed with the right provider choice.

3. Speed & Latency: When Every Millisecond Counts

Lightning Fast (<500ms):

Nova Micro (from AWS)

Gemini 2.0 Flash-Lite and 2.5 Flash-Lite (from Google)

Llama 4 Scout

GPT-5 nano and GPT-4.1 nano (from OpenAI)

Ministral 3B, Ministral 8B and Mistral Small 3.1 (from Mistral)

Perfect for: Real-time chat, live coding assistants, interactive apps - without complex logic or guardrails

Balanced Speed & Quality (1-3 seconds):

GPT-5 mini / GPT-4.1 mini / GPT-4o mini - Good balance of speed and capability

Gemini 2.0 Flash and 2.5 Flash - Fast with multimodal support

Claude Sonnet 3.5 and later - Quick for most tasks

Perfect for: Content generation, analysis, most business applications

Quality Over Speed (5+ seconds):

OpenAI o3 - Maximum reasoning capability

Claude Opus Series - Deep analysis and complex tasks

Gemini Pro Series - Comprehensive multimodal processing

Perfect for: Research, complex analysis, high-stakes decisions
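Published latency numbers vary with region, prompt length and load, so it is worth measuring latency on your own prompts. Below is a rough sketch of a timing harness. `fake_model_call` is a stand-in for your real client call, and the tier cut-offs in `latency_tier` are my simplification of the three bands above (the gaps between bands are folded into the neighbouring tier).

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 5) -> float:
    """Return the median wall-clock latency of `call` in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


def latency_tier(latency_ms: float) -> str:
    """Map a latency to the rough tiers used in this section (assumed cut-offs)."""
    if latency_ms < 500:
        return "lightning fast"
    if latency_ms <= 3000:
        return "balanced"
    return "quality over speed"


def fake_model_call() -> str:
    # Stand-in for a real API request. Replace with your own client call.
    time.sleep(0.01)
    return "ok"


median_ms = measure_latency_ms(fake_model_call)
print(f"median latency: {median_ms:.0f} ms -> {latency_tier(median_ms)}")
```

The median is used rather than the mean so that a single slow request does not skew the result. For user-facing apps you may also want to track the slowest few percent of requests.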

4. Capabilities: Matching Models to Your Tasks

Code Generation:

Claude Sonnet Series - Consistently rated best for coding

GPT-5 - Strong across multiple languages

Codestral (Mistral) - Specialized for development tasks

Writing & Analysis:

Claude Sonnet Series - Excellent for long-form content, breaking down complex data

GPT-4o/5/4.1 - Versatile writing styles, strong reasoning for most tasks

Gemini Pro - Good for creative writing, good with charts and visual data

Multimodal (Text/Audio/Image/Video as input) Leaders:

Gemini 2.5 Pro/Flash - Strong image and video understanding. Accepts text, audio, image, video and PDF as input, with text-only output

Claude 3.5 and later models - Support text, image and PDF documents as input

Most OpenAI models - The 4o, 4.1 and 5 series support text, PDF and image as input

GPT-realtime - For real-time, voice-based conversational AI. Images are also supported as input

Long Context Champions:

Llama 4 Scout (10M context window) - Handles massive documents (how well is debatable)

Gemini 2/2.5 Pro (1M)

Llama 4 Maverick (1M)

OpenAI GPT-4.1 (1M)

Claude 4.5 Sonnet (1M is available in beta)
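A quick way to use these numbers is to check which models can even hold your document before you test anything. The sketch below uses the context windows listed above. The 4-characters-per-token ratio is only a rough rule of thumb for English text and the output reserve is an arbitrary assumption, so use the provider's own tokenizer when you need exact counts.

```python
# Context windows in tokens, as listed above.
CONTEXT_WINDOWS = {
    "Llama 4 Scout": 10_000_000,
    "Gemini 2.5 Pro": 1_000_000,
    "Llama 4 Maverick": 1_000_000,
    "GPT-4.1": 1_000_000,
}

CHARS_PER_TOKEN = 4  # Assumption: rough average for English text.


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def models_that_fit(text: str, reserve_for_output: int = 4_000) -> list[str]:
    """Return the models whose context window can hold the text plus a reply."""
    needed = estimate_tokens(text) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


# Hypothetical 8-million-character document (~2M tokens by this estimate).
big_document = "x" * 8_000_000
print(models_that_fit(big_document))  # prints ['Llama 4 Scout']
```

Fitting in the window is a necessary condition, not a quality guarantee. Retrieval accuracy over very long inputs still needs to be tested on your own data.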

5. Flexibility & Future-Proofing: Avoiding Vendor Lock-In

Multi-Provider Friendly:

OpenAI-Compatible APIs: Many providers (Together AI, Fireworks, etc.) offer OpenAI-compatible endpoints

Hugging Face Models: Easy to switch between open-source options

Anthropic’s Claude: Available via multiple channels (direct, AWS, GCP)

Self-Hosting Champions:

Llama 4 Scout/Maverick - Meta’s open-source flagship

Mistral AI - Mistral’s open-source offerings

Qwen 2.5 - Strong open alternative from Alibaba
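In practice, staying flexible mostly means keeping the provider and model name out of your application logic. Here is a minimal sketch of that idea, assuming your providers expose an OpenAI-style chat completions endpoint. The URLs, model IDs and the `MODELS` registry are hypothetical placeholders, and no network call is made.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    base_url: str  # Hypothetical endpoint, replace with your provider's URL.
    model: str     # Model identifier exactly as the provider names it.


# Hypothetical registry: task type -> model. Swapping models is a one-line change.
MODELS = {
    "quality": ModelConfig("https://provider-a.example/v1", "quality-model"),
    "volume": ModelConfig("https://provider-b.example/v1", "volume-model"),
}


def build_chat_request(task: str, prompt: str) -> tuple[str, str]:
    """Return (url, JSON body) for an OpenAI-style chat completions request."""
    cfg = MODELS[task]
    body = {
        "model": cfg.model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return f"{cfg.base_url}/chat/completions", json.dumps(body)


url, body = build_chat_request("volume", "Summarize this ticket in one line.")
print(url)
print(body)
```

Because the rest of the code only ever asks for "quality" or "volume", moving a task to a newly released model or a self-hosted endpoint does not touch the calling code. Authentication headers and provider-specific parameters are left out here and would need to be added per provider.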

The Bottom Line: Start Smart, Stay Flexible

For Most Businesses: Start with Claude Sonnet for quality tasks and GPT-4o/4.1/5 Mini for volume. This combo covers 90% of use cases while keeping costs reasonable.

For Budget-Conscious Teams: GPT-4o/4.1/5 Mini gives you flexibility without breaking the bank.

For Enterprises: Google Vertex AI, AWS Bedrock and Azure OpenAI provide the security and compliance most large organizations need.

The Universal Truth: The best model is the one you actually implement. Don’t spend months in analysis paralysis – pick a solid option from this guide, start building, and iterate based on real usage.

Remember: Every successful AI implementation started with someone picking a model and getting their hands dirty. The landscape will keep evolving, but the organizations winning with AI are the ones that started yesterday, not the ones still researching tomorrow.

Looking to build something custom for your business but struggling to see the light at the end of the tunnel of experimentation? We help founders bridge the gap between ideas and implementation. From figuring out what to build to scaling your AI initiatives, we’ve got the expertise to get your vision to real users (and real money :) ).

Get in touch – let’s build something amazing together.

If you’re new to this blog - welcome! I’m Snigdha, an AI developer who has developed and led AI product development at startups (early stage and nearing IPOs) and enterprises. I now help teams like yours build and scale AI solutions, at MindChords.AI.

Read more about why I started this blog - the mission behind NextWorldTrio